
The Strategy Behind Smarter AI Training: Mastering Large-Scale Data Collection

The quality of the data used for training models is a fundamental factor that determines the success of AI-driven applications. AI models, whether they focus on Natural Language Processing (NLP), computer vision, or autonomous systems, require massive datasets to learn and generalize effectively. Training these models with high-quality, diverse, and large-scale data is crucial for ensuring accuracy, robustness, and versatility in real-world applications.

However, the process of gathering and preparing this data is fraught with challenges. This article explores the complexities of data collection for AI training and offers actionable strategies to overcome the associated challenges. By learning how to collect data for AI training efficiently, businesses can accelerate their AI development while maintaining high data quality.

Key Takeaways

- Data is the foundation of AI: AI models, whether focused on NLP, computer vision, or reinforcement learning, require vast amounts of high-quality, diverse data for effective training.

- The challenges of large-scale data collection: Gathering and preparing data for AI training is complex, involving issues like data variety, quality, privacy concerns, scalability, and labeling.

- Effective strategies for data collection: Key strategies include defining clear data requirements, leveraging automated tools, and incorporating crowdsourcing for scalable data labeling.

- Ensuring fairness and diversity: Bias in data can lead to unreliable and unfair AI models. Using fairness audits and bias detection tools helps mitigate these risks and ensures that AI models are developed with diverse, representative datasets.

Understanding AI Training Needs

AI training involves using data to teach machines to recognize patterns, make decisions, and improve over time. Whether it is machine learning (ML), deep learning, or reinforcement learning, the process revolves around feeding vast amounts of data into algorithms that "learn" from it.

Types of AI Models Requiring Large-Scale Data

For AI models to be effective, they must process vast amounts of data. Different types of AI models require different types of data, each with its own challenges and characteristics.

The Challenges of Large-Scale Data Collection

Data collection for AI training is not a simple task. It comes with several challenges, each requiring its own strategy. As Dr. Tom Mitchell, a professor of machine learning at Carnegie Mellon University, puts it:

"The success of AI models is directly linked to the diversity and quality of the data they're trained on. Models trained on biased or insufficient data will perform poorly in real-world applications."

Data Variety

One of the significant hurdles in large-scale data collection is covering a diverse range of data types. AI systems require data from multiple sources and in multiple formats, such as text, images, audio, and sensor data. Each type of data demands its own tools and methods for efficient collection.

Data Privacy & Security

AI applications often deal with sensitive data, such as personal information or proprietary business data. Ensuring compliance with privacy regulations (e.g., GDPR) and safeguarding data from breaches are paramount.
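One common safeguard is to pseudonymize personal fields before data enters the training pipeline. The sketch below is a minimal illustration, assuming records are plain dictionaries; the field names in `PII_FIELDS` and the function name `pseudonymize` are hypothetical. Keyed hashing is pseudonymization rather than full anonymization, so it does not establish regulatory compliance on its own.

```python
import hashlib
import hmac

# Hypothetical field names; adapt these to the actual schema.
PII_FIELDS = {"email", "name"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Replace PII values with keyed SHA-256 digests.

    The same input and key always give the same digest, so records
    remain joinable without exposing the raw value.
    """
    out = {}
    for field, value in record.items():
        if field in PII_FIELDS and value is not None:
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            out[field] = digest.hexdigest()
        else:
            out[field] = value
    return out


if __name__ == "__main__":
    key = b"replace-with-a-secret-from-a-vault"  # assumption: key is managed elsewhere
    print(pseudonymize({"email": "user@example.com", "age": 30}, key))
```

The secret key should be stored separately from the dataset, since anyone holding both can re-link digests to known values.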

Data Quality

The accuracy of AI models is directly influenced by the quality of the data they are trained on. Models trained on inaccurate, inconsistent, or incomplete data may produce unreliable or biased outcomes. According to a McKinsey report, 80% of AI project time is spent on data preparation and cleaning. Ensuring clean, accurate, and representative data is one of the most critical tasks in the AI training pipeline.
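Basic quality checks can be automated before any training run. The following is a minimal sketch, assuming each example is a dictionary; the function name `quality_report` and the field names in the usage example are illustrative, not part of any specific tool. It counts missing required fields and exact duplicate records.

```python
from collections import Counter


def quality_report(records: list[dict], required: list[str]) -> dict:
    """Count missing required fields and exact duplicate records."""
    missing = Counter()
    seen = set()
    duplicates = 0
    for rec in records:
        for field in required:
            # Treat absent keys, None, and empty strings as missing.
            if rec.get(field) in (None, ""):
                missing[field] += 1
        # Build a hashable fingerprint of the whole record.
        fingerprint = tuple(sorted((k, str(v)) for k, v in rec.items()))
        if fingerprint in seen:
            duplicates += 1
        else:
            seen.add(fingerprint)
    return {"total": len(records), "missing": dict(missing), "duplicates": duplicates}


if __name__ == "__main__":
    sample = [
        {"text": "great product", "label": "pos"},
        {"text": "great product", "label": "pos"},  # exact duplicate
        {"text": "", "label": None},                # incomplete record
    ]
    print(quality_report(sample, required=["text", "label"]))
    # {'total': 3, 'missing': {'text': 1, 'label': 1}, 'duplicates': 1}
```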

Scalability

As AI projects grow in scope, so too does the volume of data required. Managing large-scale data collection and analysis across various channels, such as cloud storage and distributed systems, poses scalability challenges. Businesses need tools and methods that can handle this growing demand for data while maintaining performance, ensuring that the data pipelines are efficient and flexible enough to scale.

Cost Management

Collecting, labeling, and storing data at scale is resource-intensive, and balancing data quality against budget constraints can be difficult. In addition, processing large datasets, such as text datasets, demands significant computational resources, which can further escalate costs.

Data Labeling

Data labeling, the process of tagging data with useful metadata, is one of the most time-consuming aspects of data collection. For high-quality training, data needs to be meticulously labeled to ensure the AI system receives correct and consistent information.
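One way to check whether labels are consistent is to have two annotators label the same items and measure their agreement. The sketch below computes Cohen's kappa, a standard agreement statistic that corrects for chance agreement; the function name and sample labels are illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a: list, labels_b: list) -> float:
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if both annotators labeled at random
    # according to their own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    annotator_1 = ["cat", "cat", "dog", "dog"]
    annotator_2 = ["cat", "cat", "dog", "cat"]
    print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Values near 1 indicate strong agreement, while values near 0 suggest agreement no better than chance, which is usually a sign that the labeling guidelines need revision.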

Strategies for Effective Data Collection

Effective data collection is the cornerstone of building successful AI models. However, simply gathering large volumes of data is not enough; it is equally important to ensure that the data is both high-quality and tailored to the specific needs of the AI model. The strategies below help businesses streamline collection while maintaining the integrity and relevance of the data.

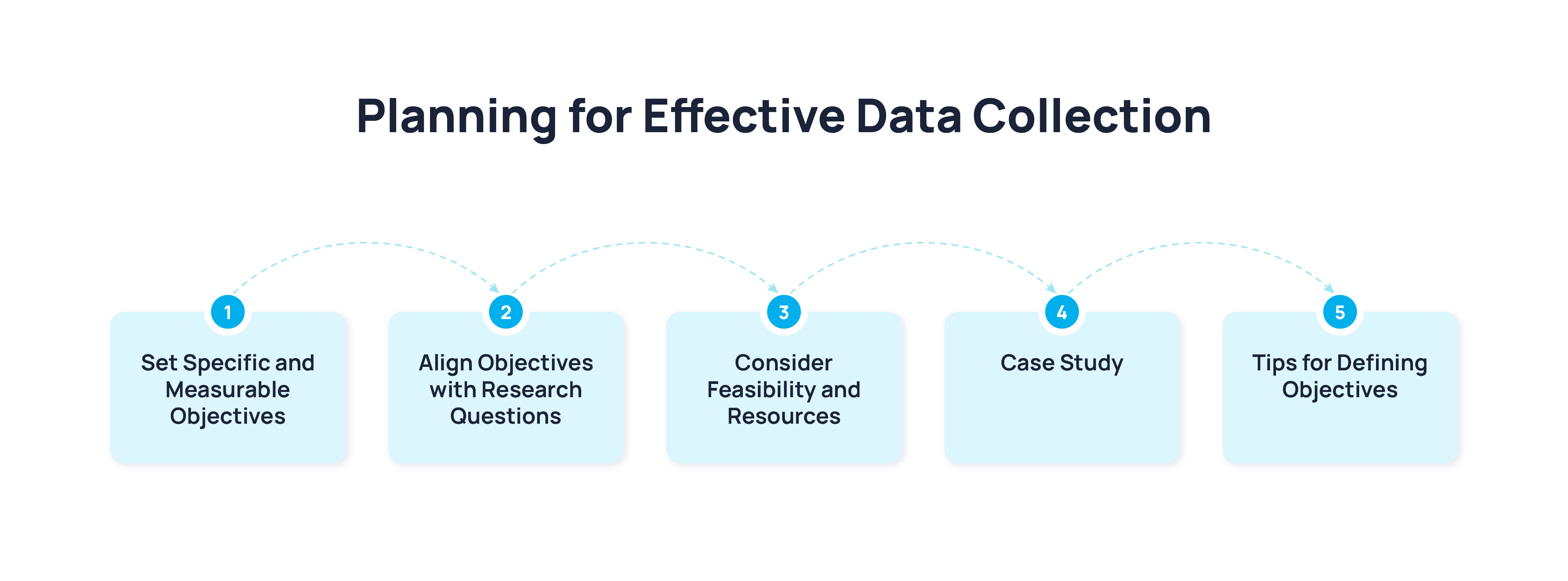

Establishing Clear Data Requirements

To optimize data collection for AI training, it's essential to define the specific data required for the project at hand. Identifying the right data sources, such as existing AI datasets, proprietary databases, or crowdsourced contributions, will ensure that the collected data aligns with the AI model's needs.
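Writing requirements down as a small, machine-checkable specification makes it easier to see what is still missing. The sketch below is one possible shape for such a specification; the class `DataRequirement`, its fields, and the `shortfall` helper are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataRequirement:
    """A hypothetical, minimal description of what a project needs."""
    modality: str                 # e.g. "text", "image", "audio"
    min_per_label: int            # minimum examples wanted for each label
    labels: tuple[str, ...]       # label set the model must learn
    sources: tuple[str, ...]      # where the data is expected to come from


def shortfall(req: DataRequirement, collected: dict[str, int]) -> dict[str, int]:
    """Return how many more examples each under-filled label still needs."""
    return {
        label: req.min_per_label - collected.get(label, 0)
        for label in req.labels
        if collected.get(label, 0) < req.min_per_label
    }


if __name__ == "__main__":
    req = DataRequirement(
        modality="text",
        min_per_label=100,
        labels=("pos", "neg"),
        sources=("proprietary_db", "crowdsourced"),
    )
    print(shortfall(req, {"pos": 120, "neg": 40}))  # {'neg': 60}
```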

Leveraging Automated Data Collection Tools

Building pipelines for large-scale data collection can significantly improve the speed and efficiency of data acquisition. Tools like web scrapers, APIs, and data pipelines enable businesses to gather data from multiple sources in real time. These tools automate the extraction and processing of data, reducing manual effort and ensuring that the data is readily available for AI training.

Crowdsourcing also plays a vital role in scaling up data labeling. By tapping into a global network of data labelers, businesses can rapidly scale labeling efforts to meet the demands of large AI projects.
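When several crowd workers label the same item, their answers need to be combined into one label. A simple and widely used approach is majority voting with a minimum agreement threshold. The sketch below assumes votes are grouped per item; the function name and the 0.6 threshold are illustrative choices, not a recommendation from any particular platform.

```python
from collections import Counter
from typing import Optional


def aggregate_labels(
    votes: dict[str, list[str]], min_agreement: float = 0.6
) -> dict[str, Optional[str]]:
    """Majority-vote label per item; None when agreement is too low."""
    result: dict[str, Optional[str]] = {}
    for item_id, labels in votes.items():
        if not labels:
            result[item_id] = None
            continue
        label, count = Counter(labels).most_common(1)[0]
        # Accept the top label only if enough workers chose it.
        result[item_id] = label if count / len(labels) >= min_agreement else None
    return result


if __name__ == "__main__":
    votes = {
        "img1": ["cat", "cat", "dog"],  # 2 of 3 agree
        "img2": ["cat", "dog"],         # split vote
    }
    print(aggregate_labels(votes))  # {'img1': 'cat', 'img2': None}
```

Items that come back as `None` can be routed to additional workers or to an expert reviewer rather than being used for training as-is.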

Data Augmentation Techniques

To increase dataset diversity, data augmentation techniques such as synthetic data generation are useful, especially in areas like computer vision and NLP. For example, rotating, cropping, or adding noise to images can generate new training examples that help the model generalize better.
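The image transformations mentioned above can be sketched in a few lines. This example uses plain nested lists as grayscale images with pixel values in [0, 1] so that it runs without external libraries; real projects typically rely on an image or array library instead, and the function names here are illustrative.

```python
import random

Image = list[list[float]]  # rows of grayscale pixel values in [0, 1]


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def add_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add Gaussian noise to every pixel, clipping to the [0, 1] range."""
    return [
        [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
        for row in img
    ]


def augment(img: Image, seed: int = 0) -> list[Image]:
    """Return three augmented variants of one image."""
    rng = random.Random(seed)  # seeded so results are reproducible
    return [rotate90(img), flip_horizontal(img), add_noise(img, 0.05, rng)]


if __name__ == "__main__":
    image = [[0.1, 0.2], [0.3, 0.4]]
    print(rotate90(image))         # [[0.3, 0.1], [0.4, 0.2]]
    print(flip_horizontal(image))  # [[0.2, 0.1], [0.4, 0.3]]
    print(len(augment(image)))     # 3
```

Transformations should only be applied when they preserve the label: flipping a photo of a cat is safe, while flipping an image of text or a traffic sign may not be.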

Managing and Storing Large Datasets

Storing and managing large datasets requires robust storage on cloud platforms like AWS, Google Cloud, or Microsoft Azure, which offer scalable environments that can grow with the project. Efficient database systems should be implemented for fast access and retrieval of the data, ensuring quick turnaround times for model training.

Ensuring Data Diversity and Bias Mitigation

Diverse datasets are vital for developing AI models that perform well across various demographic and cultural groups. Models trained on biased data risk perpetuating inequalities or making inaccurate predictions for underrepresented groups.

Using fairness audits and tools like bias detection algorithms helps ensure that AI models do not favor certain groups or perspectives over others. Regular assessments and updates to the dataset are necessary to mitigate biases.
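A basic fairness audit of a dataset can start with two numbers per group: how much of the dataset the group makes up, and how often it receives the positive label. The sketch below is a minimal illustration, assuming each record carries a group attribute and a label; the key names and helper functions are hypothetical, and the gap it reports is only one of several possible fairness measures.

```python
from collections import defaultdict


def group_rates(records: list[dict], group_key: str, label_key: str, positive) -> dict:
    """Per-group share of the dataset and rate of the positive label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        if rec[label_key] == positive:
            positives[group] += 1
    n = len(records)
    return {
        g: {"share": totals[g] / n, "positive_rate": positives[g] / totals[g]}
        for g in totals
    }


def parity_gap(rates: dict) -> float:
    """Largest difference in positive rate between any two groups."""
    values = [r["positive_rate"] for r in rates.values()]
    return max(values) - min(values) if values else 0.0


if __name__ == "__main__":
    data = (
        [{"group": "A", "approved": 1}] * 3 + [{"group": "A", "approved": 0}]
        + [{"group": "B", "approved": 1}] + [{"group": "B", "approved": 0}]
    )
    rates = group_rates(data, "group", "approved", positive=1)
    print(rates["A"]["positive_rate"], rates["B"]["positive_rate"])  # 0.75 0.5
    print(parity_gap(rates))  # 0.25
```

A small share flags underrepresentation, and a large gap flags labels that are skewed across groups; either is a prompt for closer review rather than proof of bias.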

Managing Data Through the AI Development Lifecycle

AI development is not a one-off project but an ongoing process. As models evolve, so too should the datasets that train them. Continuous monitoring and updates of data ensure that the model adapts to new trends, challenges, and changes in real-world data.
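One way to monitor whether incoming data has drifted away from the original training data is to compare the distribution of a categorical feature or label between the two. The sketch below uses total variation distance, which ranges from 0 (identical distributions) to 1 (no overlap); the function names and the 0.1 alert threshold are assumptions for illustration, and a suitable threshold depends on the application.

```python
from collections import Counter


def distribution(values: list) -> dict:
    """Relative frequency of each distinct value."""
    if not values:
        raise ValueError("cannot compute a distribution over no values")
    n = len(values)
    return {k: c / n for k, c in Counter(values).items()}


def total_variation(reference: list, current: list) -> float:
    """Total variation distance between two categorical samples."""
    p = distribution(reference)
    q = distribution(current)
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def has_drifted(reference: list, current: list, threshold: float = 0.1) -> bool:
    """Flag drift when the distance exceeds an assumed threshold."""
    return total_variation(reference, current) > threshold


if __name__ == "__main__":
    training_labels = ["a", "a", "b", "b"]
    recent_labels = ["a", "b", "b", "b"]
    print(total_variation(training_labels, recent_labels))  # 0.25
    print(has_drifted(training_labels, recent_labels))      # True
```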

Mastering Large-Scale Data Collection for Smarter AI Models with Sapien

As businesses continue to develop and refine AI models, mastering large-scale data collection becomes crucial for AI success. Sapien’s advanced data collection and labeling solutions offer the infrastructure needed to collect, annotate, and process vast amounts of diverse data with speed and accuracy.

By using Sapien’s decentralized workforce, custom data collection methods, and cutting-edge data quality controls, businesses can overcome the challenges of large-scale data collection and ensure their AI models are trained on the best datasets available.

As AI continues to shape the future of technology, ensuring that your models are built on high-quality, diverse datasets is essential for success. Contact Sapien today to learn how we can help you master large-scale data collection for your AI projects and unlock the full potential of your AI models.

FAQs

How can businesses ensure the data they collect is diverse?

Businesses can use fairness audits, collect data from diverse sources, and employ techniques like data augmentation to ensure that their datasets represent a wide range of demographic and cultural groups.

What tools can help with automating data collection?

Web scrapers, APIs, and data pipelines are essential tools for automating data collection. These tools help businesses gather data from various online and offline sources efficiently.

How does data augmentation impact model performance in AI?

Data augmentation improves model performance by artificially increasing the size and diversity of the training dataset. In fields like computer vision, techniques such as image rotation, flipping, and noise addition help the model generalize better, improving accuracy when deployed in real-world scenarios.